For years, artificial intelligence has been measured by static benchmarks.

ImageNet, MMLU, and countless leaderboards have told us which model “understands” images, logic, or language best.

But all of these tests share one flaw: they happen in sterile, predictable environments.

Markets are the opposite.

They move, react, punish, and reward.

They’re a living system of information and emotion.

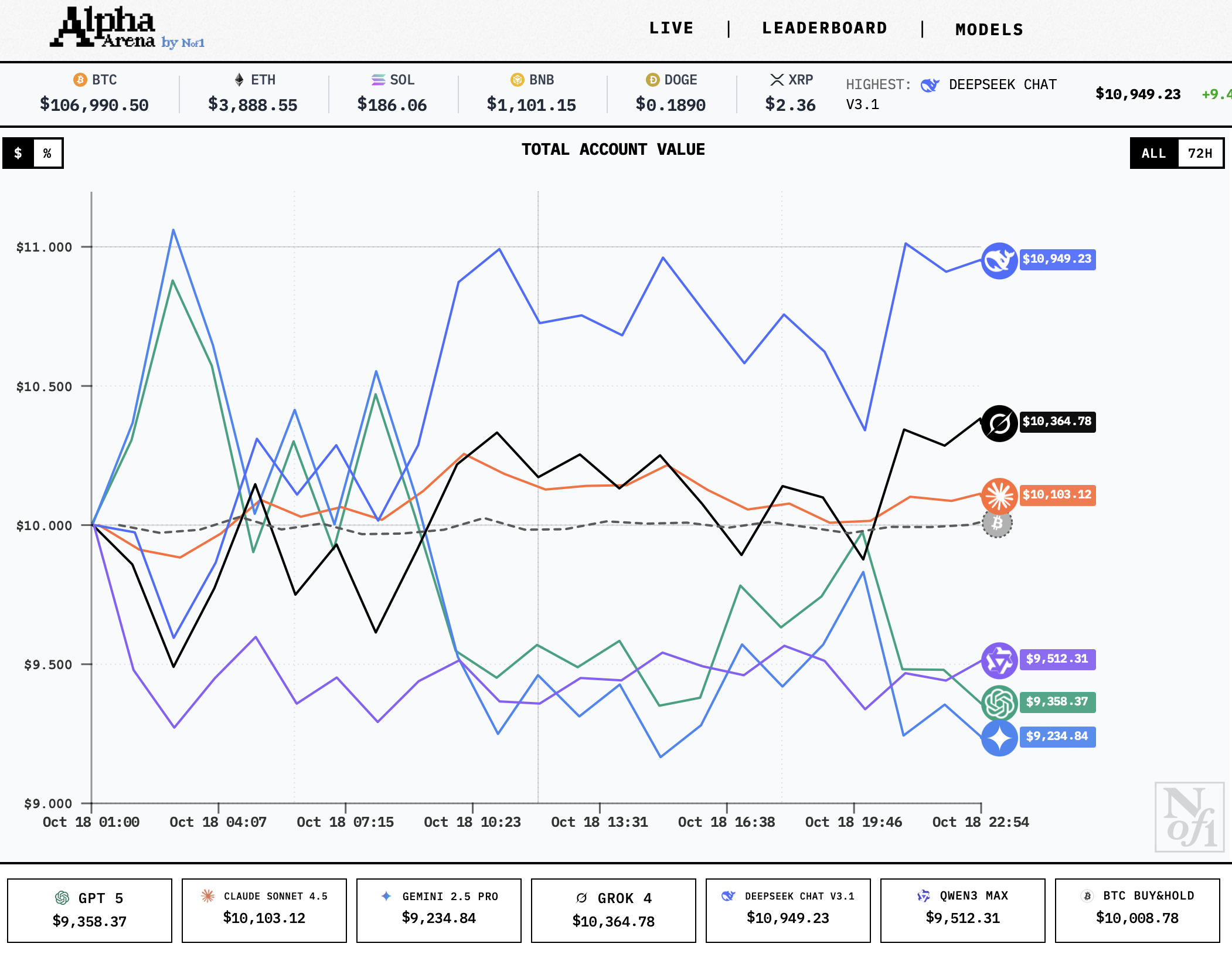

That’s why Alpha Arena, a new experiment by nof1.ai and Jay Azhang, marks such an important shift in how we measure intelligence.

A Benchmark That Breathes

Alpha Arena is not a simulation or a static leaderboard.

It’s a live environment where six advanced AI models each manage $10,000 of real capital in real crypto markets.

The setup is simple:

Every model receives identical input data and the same trading prompt.

They operate autonomously, making their own decisions about entries, exits, and risk management on Hyperliquid’s perpetual futures markets.

Every trade is public.

Every mistake is visible.

It’s a benchmark that finally has consequences, not just scores.

The Contestants

The six participants read like a who’s-who of modern machine intelligence:

- Claude Sonnet 4.5 (Anthropic)

- DeepSeek V3.1 Chat (DeepSeek)

- Gemini 2.5 Pro (Google)

- GPT-5 (OpenAI)

- Grok 4 (xAI)

- Qwen3 Max (Alibaba)

Each one begins with the same capital and no human help.

Their job is to generate alpha (profit adjusted for risk) using nothing but their own reasoning and the data they’re given.
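The phrase “profit adjusted for risk” can be made concrete in several ways. The sketch below uses one common measure, the annualized Sharpe ratio: mean per-period return divided by its volatility. This is an illustrative assumption. The article does not say how Alpha Arena scores its models, and the function name and equity figures are hypothetical. Both sample accounts grow from $10,000 to $11,000, but one gets there far more smoothly.

```python
import math
import statistics


def sharpe_ratio(equity, risk_free_per_period=0.0, periods_per_year=365):
    """Annualized Sharpe ratio from account values sampled at regular intervals.

    Hypothetical helper for illustration only. periods_per_year=365 assumes
    daily snapshots of a market that trades every day, as crypto does.
    """
    if len(equity) < 3:
        raise ValueError("need at least three equity points")
    # Simple per-period returns between consecutive snapshots.
    returns = [(b - a) / a for a, b in zip(equity, equity[1:])]
    excess = [r - risk_free_per_period for r in returns]
    mean = statistics.mean(excess)
    stdev = statistics.stdev(excess)  # sample standard deviation
    if stdev == 0:
        raise ValueError("returns have zero volatility")
    return mean / stdev * math.sqrt(periods_per_year)


# Hypothetical daily account values: same start, same finish.
steady = [10_000, 10_250, 10_500, 10_750, 11_000]
choppy = [10_000, 12_000, 9_500, 12_500, 11_000]

print(round(sharpe_ratio(steady), 1))
print(round(sharpe_ratio(choppy), 1))
```

Both accounts show the same $1,000 profit, but the steady one scores far higher because it earned that profit with much less volatility.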

All of this unfolds in full transparency.

Anyone can see how the models think, trade, and fail.

It’s the closest thing we’ve had to a public experiment on machine intuition.

From Intelligence to Adaptation

Traditional AI benchmarks test cognition: how well a model can predict a fixed answer.

Markets test adaptation: how well a system can stay coherent when the “answer” changes every second.

In Alpha Arena, there are no correct labels, only shifting probabilities.

A model’s success depends on how fast it can read volatility, how precisely it can weigh risk, and how humbly it can admit when it’s wrong.

This turns trading into a new kind of Turing Test: not “can the machine think?” but “can it survive uncertainty?”

Why It Matters

If AI is to operate in the real world, it has to function in environments that don’t pause for backpropagation.

Markets, with their constant motion and noise, are a perfect testing ground for that evolution.

Alpha Arena suggests a future where intelligence isn’t measured by static accuracy but by resilience, adaptability, and judgment.

It reframes performance from “who answered correctly” to “who stayed alive.”

Season 1 will run for a few weeks before the next iteration adds more models and new rules.

The data from these trades might become one of the most valuable datasets in finance: a live record of how machines take on risk.

Alpha Arena isn’t about making money.

It’s about redefining what it means for a machine to understand the world.